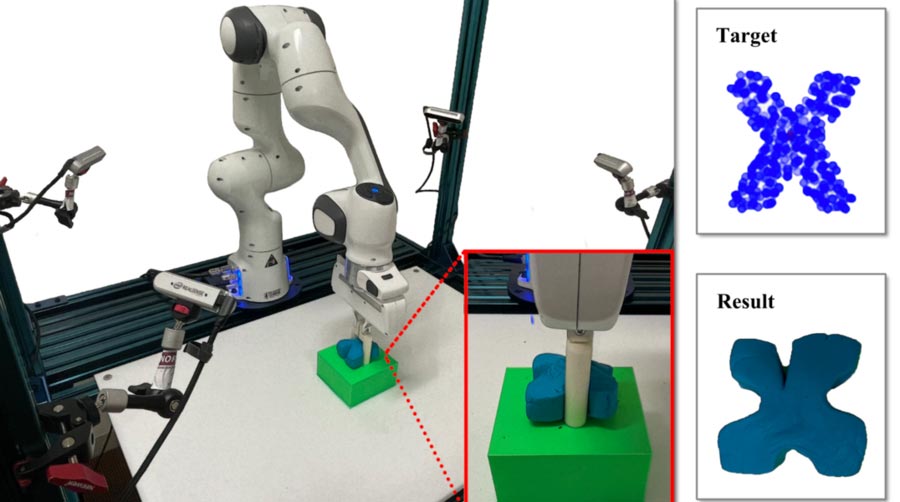

Researchers manipulate elasto-plastic objects into target shapes from visual cues. Credit: MIT CSAIL

Robots manipulate soft, deformable material into various shapes from visual inputs in a new system that could one day enable better home assistants.

Many of us feel an overwhelming sense of joy from our inner child when stumbling across a pile of the fluorescent, rubbery mixture of water, salt, and flour that put goo on the map: play dough. (Even if this rarely happens in adulthood.)

While manipulating play dough is fun and easy for 2-year-olds, the shapeless sludge is quite difficult for robots to handle. With rigid objects, machines have become increasingly reliable, but manipulating soft, deformable objects comes with a laundry list of technical challenges. One of the keys to the difficulty is that, as with most flexible structures, if you move one part, you’re likely affecting everything else.

Recently, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Stanford University let robots try their hand at playing with the modeling compound, but not for nostalgia’s sake. Their new system, called “RoboCraft,” learns directly from visual inputs to let a robot with a two-fingered gripper see, simulate, and shape doughy objects. It could reliably plan a robot’s behavior to pinch and release play dough to make various letters, including ones it had never seen. In fact, with just 10 minutes of data, the two-finger gripper rivaled human counterparts that teleoperated the machine, performing on par, and at times even better, on the tested tasks.

“Modeling and manipulating objects with high degrees of freedom are essential capabilities for robots to learn how to enable complex industrial and household interaction tasks, like stuffing dumplings, rolling sushi, and making pottery,” says Yunzhu Li, CSAIL PhD student and author of a new paper about RoboCraft. “While there’s been recent advances in manipulating clothes and ropes, we found that objects with high plasticity, like dough or plasticine, despite ubiquity in those household and industrial settings, was a largely underexplored territory. With RoboCraft, we learn the dynamics models directly from high-dimensional sensory data, which offers a promising data-driven avenue for us to perform effective planning.”

When working with undefined, smooth materials, the whole structure needs to be taken into account before any kind of efficient and effective modeling and planning can be done. RoboCraft uses a graph neural network as the dynamics model and transforms images into graphs of small particles, together with algorithms, to provide more precise predictions about the material’s change in shape.
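To make the "graph of small particles" idea concrete, here is a minimal sketch of one common way to turn a set of particles into a graph: connect every pair that lies within a fixed radius. The function name `build_particle_graph`, the radius rule, and the sample coordinates are illustrative assumptions and are not taken from the RoboCraft paper.

```python
import math
from itertools import combinations


def build_particle_graph(particles, radius):
    """Connect every pair of particles closer than `radius`.

    particles: list of (x, y, z) tuples standing in for points sampled
               from the object (hypothetical data, not real sensor output).
    Returns a list of undirected edges (i, j) with i < j.
    """
    edges = []
    for i, j in combinations(range(len(particles)), 2):
        if math.dist(particles[i], particles[j]) < radius:
            edges.append((i, j))
    return edges


# Four made-up particles: three clustered together, one far away.
particles = [
    (0.0, 0.0, 0.0),
    (0.1, 0.0, 0.0),
    (0.0, 0.1, 0.0),
    (1.0, 1.0, 1.0),
]
edges = build_particle_graph(particles, radius=0.2)
print(edges)
```

In a graph like this, nearby particles share edges, so a model that passes information along edges can capture how moving one part of the material affects its neighbors. The isolated fourth particle gets no edges because nothing lies within the chosen radius.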

RoboCraft just employs visual data rather than complicated physics simulators, which researchers often use to model and understand the dynamics and force acting on objects. Three components work together within the system to shape soft material into, say, an “R.”

Perception, the first part of the system, is all about learning to “see.” It employs cameras to collect raw, visual sensor data from the environment, which are then turned into little clouds of particles to represent the shapes. This particle data is used by a graph-based neural network to learn to “simulate” the object’s dynamics, or how it moves. Armed with the training data from many pinches, algorithms then help plan the robot’s behavior so it learns to “shape” a blob of dough. While the letters are a bit sloppy, they’re indubitably representative.
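The simulate-then-shape loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not RoboCraft's implementation: `toy_dynamics` is a hand-written stand-in for the learned graph network, the Chamfer distance is one plausible way to compare particle sets, and the random-sampling planner `plan_pinch` is a generic technique chosen for brevity. All names and numbers are hypothetical.

```python
import math
import random


def chamfer_distance(a, b):
    """Symmetric average nearest-neighbor distance between two particle sets."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


def toy_dynamics(particles, action):
    """Hand-written stand-in for a learned dynamics model (an assumption).

    A pinch at x-position `center` with strength `squeeze` in [0, 1] pulls
    particles within 0.5 units of `center` toward the line y = 0.
    """
    center, squeeze = action
    return [
        (x, y * (1.0 - squeeze)) if abs(x - center) < 0.5 else (x, y)
        for x, y in particles
    ]


def plan_pinch(particles, target, dynamics, num_samples=200, seed=0):
    """Sample random pinches, simulate each one, keep the closest to target."""
    rng = random.Random(seed)
    best_action, best_cost = None, float("inf")
    for _ in range(num_samples):
        action = (rng.uniform(-1.0, 1.0), rng.uniform(0.0, 1.0))
        cost = chamfer_distance(dynamics(particles, action), target)
        if cost < best_cost:
            best_action, best_cost = action, cost
    return best_action, best_cost


# A flat 2D "blob" of particles and a target with a pinched middle.
blob = [(-1.0 + 0.25 * i, y) for i in range(9) for y in (-0.5, 0.5)]
target = toy_dynamics(blob, (0.0, 0.5))

baseline = chamfer_distance(blob, target)  # cost of doing nothing
action, cost = plan_pinch(blob, target, toy_dynamics)
print(f"baseline={baseline:.3f} planned={cost:.3f} action={action}")
```

The design point is that the planner never touches real dough: it only queries the dynamics model, which is why a model learned from a small amount of pinching data can be enough to choose actions.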

Besides creating cutesy shapes, the team of researchers is (actually) working on making dumplings from dough and a prepared filling. It’s a lot to ask at the moment with only a two-finger gripper. A rolling pin, a stamp, and a mold would be additional tools required by RoboCraft (much as a baker requires different tools to work effectively).

A more distant application the scientists envision is using RoboCraft for help with tasks and chores, which could be of particular help to the elderly or those with limited mobility. To accomplish this, given the many obstructions that could arise, a much more adaptive representation of the dough or item would be needed, as well as an exploration into what class of models might be suitable to capture the underlying structural systems.

“RoboCraft essentially demonstrates that this predictive model can be learned in very data-efficient ways to plan motion. In the long run, we are thinking about using various tools to manipulate materials,” says Li. “If you think about dumpling or dough making, just one gripper wouldn’t be able to solve it. Helping the model understand and accomplish longer-horizon planning tasks, such as how the dough will deform given the current tool, movements, and actions, is a next step for future work.”

Li wrote the paper alongside Haochen Shi, Stanford master’s student; Huazhe Xu, Stanford postdoc; Zhiao Huang, PhD student at the University of California at San Diego; and Jiajun Wu, assistant professor at Stanford. They will present the research at the Robotics: Science and Systems conference in New York City. The work is in part supported by the Stanford Institute for Human-Centered AI (HAI), the Samsung Global Research Outreach (GRO) Program, the Toyota Research Institute (TRI), and Amazon, Autodesk, Salesforce, and Bosch.